OpenSoundControl Application Areas

Matt Wright, 7/6/4, updated 10/8/4

Open Sound Control (OSC) is a protocol for communication among computers, sound synthesizers, and other multimedia devices that is optimized for modern networking technology.

This page lists some of the ways in which OSC has been used, organized into "application area" categories, with examples. Please let us know of other projects that we can list here.

Network Architectures

In the application areas described below, collections of devices are connected with OSC in the following ways:

- Heterogeneous Distributed Multiprocessing on Local Area Networks (LANs)
  - Machines in the same location cooperate to accomplish a single task jointly, with division of labor

- Peer-to-peer LANs
  - Machines in the same location operate independently while communicating with each other

- Wide-Area Networks (WANs)
  - Machines in different locations operate independently while communicating with each other

- Single Machine
  - Software components (such as processes, threads, plugins, subpatches...) within a single machine communicate internally with OSC

Application Area: Sensor/Gesture-Based Electronic Musical Instruments

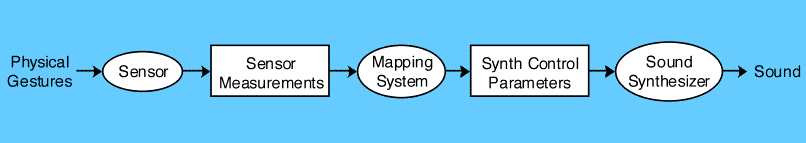

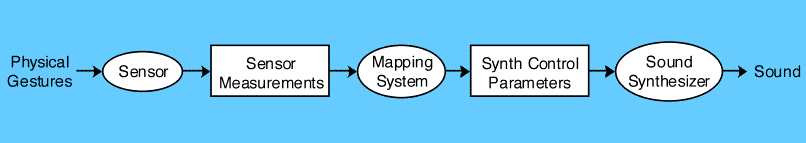

A human musician interacts with sensor(s) that detect physical activity such as motion, acceleration, pressure, displacement, flexion, keypresses, switch closures, etc. The data from the sensor(s) are processed in real time and mapped to control of electronic sound synthesis and processing.

Diagram of processes (ovals) and data (rectangles) flow in a sensor-based musical instrument.

This kind of application is often realized with Heterogeneous Distributed Multiprocessing on Local Area Networks, e.g., with the synth control parameters sent over the LAN to a dedicated "synthesis server," or with the sensor measurements sent over the LAN from a dedicated "sensor server." There have also been many realizations of this paradigm using OSC within a single machine.
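As a rough illustration of the sensor-to-synthesis path, the Python sketch below maps a raw sensor reading onto a parameter range and encodes it as an OSC 1.0 message (null-terminated address padded to 4 bytes, a type tag string beginning with a comma, then big-endian float32 arguments) sent over UDP. The address `/synth/amp`, the 10-bit sensor range, and the host/port are hypothetical; a real synthesis server defines its own address space and port.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate a byte string and pad it to a multiple of 4 bytes (OSC 1.0 string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Encode an OSC message whose arguments are all big-endian float32 (type tag 'f')."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", x) for x in floats)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def map_linear(value: float, in_lo: float, in_hi: float,
               out_lo: float, out_hi: float) -> float:
    """Map a raw sensor reading linearly onto a synthesis parameter range, clamping outliers."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def send_pressure(raw_pressure: int, server=("127.0.0.1", 57120)) -> None:
    """Send one mapped sensor reading to a (hypothetical) synthesis server over UDP."""
    # Assumption: a 10-bit pressure sensor (0..1023) controls amplitude in 0..1.
    amp = map_linear(raw_pressure, 0, 1023, 0.0, 1.0)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/synth/amp", amp), server)
```

Calling `send_pressure(512)` once per sensor frame would send an amplitude of roughly one half; whether the mapping happens on the "sensor server" or the "synthesis server" is exactly the division-of-labor choice described above.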

Examples:

- Wacom-tablet-controlled "scrubbing" of sinusoidal models synthesized on a synthesis server (Wessel et al. 1998).

- The MATRIX ("Multipurpose Array of Tactile Rods for Interactive eXpression") consists of a 12x12 array of spring-mounted rods each able to move vertically. An FPGA samples the 144 rod positions at 30 Hz and transmits them serially to a PC that converts the sensor data to OSC messages used to control sound synthesis and processing. (Overholt 2001)

- In a project at the MIT Media Lab (Jehan and Schoner 2001), the analyzed pitch, loudness, and timbre of a real-time input signal control sinusoids+noise additive synthesis. In one implementation, one machine performs the real-time analysis and sends the control parameters over OSC to a second machine performing the synthesis.

- The Slidepipe

- Three projects at UIUC are based on systems consisting of real-time 3D spatial tracking of a physical object, processed by one computer that sends OSC to a Macintosh running Max/MSP for sound synthesis and processing:

  - In the eviolin project (Goudeseune et al. 2001), a Linux machine tracks the spatial position of an electric violin and maps the spatial parameters in real time to control processing of the violin's sound output with a resonance model.

  - In the Interactive Virtual Ensemble project (Garnett et al. 2001), a conductor wears wireless magnetic sensors that send 3D position and orientation data at 100 Hz to a wireless receiver connected to an SGI Onyx. This machine processes the sensor data to determine tempo, loudness, and other parameters from the conductor; these parameters are sent via OSC to Max/MSP sound synthesis software.

  - VirtualScore is an immersive audiovisual environment for creating 3D graphical representations of musical material over time (Garnett et al. 2002). It uses a CAVE to render 3D graphics and to receive orientation and location information from a wand and a head tracker. Both real-time gestures from the wand and stored gestures from the “score” go via OSC to the synthesis server.

- In Stanford’s CCRMA’s Human/Computer Interaction seminar (Music 250a), students connect sensors to a special development board containing an Atmel AVR microcontroller which sends OSC messages over a serial connection to Pd (Wilson et al. 2003).

- Projects using La Kitchen's Kroonde (wireless) and Toaster (wired) general-purpose multichannel sensor-to-OSC interfaces.

- Projects using IRCAM's EtherSense sensors-to-OSC digitizing interface

- The BuckyMedia project uses 3D accelerometers, sending OSC over WLAN (box developed by f0am), to transmit the movements of several geodesic, tensile or synetic structures for audiovisual interpretation.

Application Area: Mapping nonmusical data to sound

This is almost the same as the "Sensor/Gesture-Based Electronic Musical Instruments" application area above, except that the intended user isn't necessarily a musician (though the end result may be intended to be musical). Therefore the focus tends to be more on fun and experimentation than on musical expression, and the user often interacts directly with the computer's user interface instead of with special-purpose sensors.

Examples:

- Picker is software for converting visual images into OSC messages for control of sound synthesis

- Sodaconstructor is software for building, simulating, and manipulating mass/spring models with gravity, friction, stiffness, etc. Parameters of the real-time state of the model (e.g., locations of particular masses) can be mapped to OSC messages for control of sound synthesis.

- SpinOSC is software for building models of spinning objects. Properties such as size, rotation speed, etc. can be sent as OSC messages.

- Stanford’s CCRMA’s Circular Optical Object Locator (Hankins et al. 2002) is based on a rotating platter upon which users place opaque objects. A digital video camera observes the platter and custom image-processing software outputs data based on the speed of rotation, the positions of the objects, etc. A separate computer running Max/MSP receives this information via OSC and synthesizes sound.

- GulliBloon is a message-centric communication framework for advanced pseudo-realtime sonic and visual content generation, built with OSC. Clients register with a central multiplexing server to subscribe to particular data streams or to broadcast their own data.

- The LISTEN project aims to "augment everyday environments through interactive soundscapes": users wear motion-tracked wireless headphones and receive individual spatial sound based on their individual spatial behavior. This interview discusses artistic aspects.

Application Area: Multiple-User Shared Musical Control

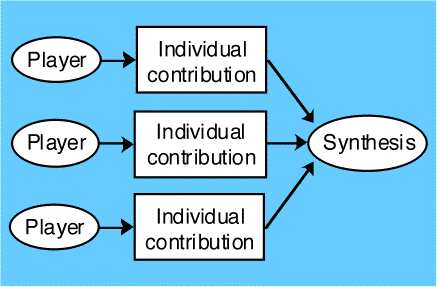

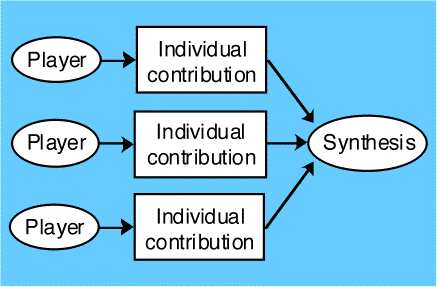

A group of human players (not necessarily skilled musicians) each interact with an interface (e.g., via a web browser) in real time to control some aspect(s) of a single shared sonic environment. This can be thought of as a client/server model in which multiple clients interact with a single sound server.

Multiple players influence a common synthetic sound output
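The server side of this client/server model can be sketched in Python as below, assuming UDP transport: it decodes incoming OSC 1.0 messages (int32, float32 and string arguments only) and merges them, whichever client sent them, into one shared parameter table. `SharedSoundState`, `serve`, and port 9000 are hypothetical names for illustration; bundles, blobs and address-pattern matching are deliberately left out of this sketch.

```python
import socket
import struct


def read_osc_string(data: bytes, offset: int):
    """Read a null-terminated, 4-byte-padded OSC string; return (text, next offset)."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    nxt = end + 1
    return text, nxt + (-nxt % 4)


def decode_message(data: bytes):
    """Decode an OSC message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    address, offset = read_osc_string(data, 0)
    tags, offset = read_osc_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = read_osc_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"type tag {tag!r} not handled in this sketch")
    return address, args


class SharedSoundState:
    """Hypothetical sound-server state that every client's messages update."""

    def __init__(self):
        self.params = {}       # OSC address -> latest value, whoever sent it
        self.last_sender = {}  # OSC address -> (host, port) of the most recent sender

    def handle(self, packet: bytes, sender) -> None:
        if packet.startswith(b"#bundle"):
            return  # bundles are not handled in this sketch
        address, args = decode_message(packet)
        if args:
            self.params[address] = args[0] if len(args) == 1 else args
            self.last_sender[address] = sender


def serve(state: SharedSoundState, port: int = 9000) -> None:
    """Receive OSC datagrams from any number of clients and apply them to one shared state."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            packet, sender = sock.recvfrom(4096)
            state.handle(packet, sender)
            # A real server would now update its synthesis engine from state.params.
```

In this design the last message to arrive wins; a real installation might instead average or otherwise combine contributions from several players.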

Examples:

- In Randall Packer's, Steve Bradley's, and John Young's "collaborative intermedia work" Telemusic #1 (Young 2001), visitors to a web site interact with Flash controls that affect sound synthesis in a single physical location.

- In the Tgarden project (see the f0.am or Georgia Tech sites) visitors in a space collectively affect the synthesized sound indirectly through physical interaction with sensor-equipped objects such as special clothing and large balls (as in "Mapping nonmusical data to sound").

- PhopCrop is a prototype system in which multiple users create and manipulate objects in a shared virtual space governed by laws of "pseudophysics." Each object has both a graphical and sonic representation.

- Grenzenlose Freiheit is an interactive sound installation that uses OSC with WLAN-connected PDAs as a sound control interface for the audience.

Application Area: Web interface to Sound Synthesis

[write me]

Examples:

Application Area: Networked LAN Musical Performance

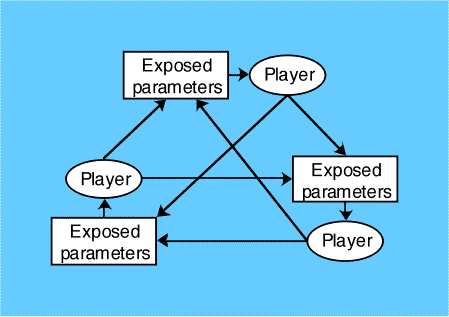

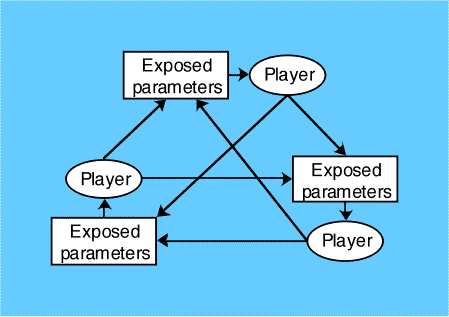

A group of musicians operate a group of computers that are connected on a LAN. Each computer is somewhat independent (e.g., it produces sound in response to local input), yet the computers control each other in some ways (e.g., by sharing a global tempo clock or by controlling some of each other's parameters). This is somewhat analogous to multi-player gaming.

Each player can control some of the parameters of every other player
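Sharing a global tempo clock over OSC typically relies on bundles, whose 64-bit time tag (NTP format: 32-bit seconds since 1 January 1900, then a 32-bit binary fraction) tells the receiver when to act on the enclosed messages. The Python sketch below builds such a bundle; the `/tempo/beat` address and its arguments are hypothetical, and scheduling by time tag only works as well as the participating machines' clocks agree (for example via NTP).

```python
import struct

NTP_UNIX_OFFSET = 2208988800  # seconds from 1900-01-01 (OSC/NTP epoch) to 1970-01-01 (Unix epoch)


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *floats: float) -> bytes:
    """Encode an OSC message whose arguments are all big-endian float32."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", x) for x in floats)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def osc_timetag(unix_time: float) -> bytes:
    """64-bit OSC time tag: 32-bit seconds since 1900, then a 32-bit binary fraction."""
    seconds = (int(unix_time) + NTP_UNIX_OFFSET) & 0xFFFFFFFF  # wraps in 2036, as NTP era 0 does
    fraction = int((unix_time % 1) * 2**32)
    return struct.pack(">II", seconds, fraction)


def osc_bundle(timetag: bytes, *messages: bytes) -> bytes:
    """'#bundle' header, time tag, then each element prefixed by its int32 byte size."""
    elements = b"".join(struct.pack(">i", len(m)) + m for m in messages)
    return osc_pad(b"#bundle") + timetag + elements


def beat_bundle(bpm: float, beat: int, start_time: float) -> bytes:
    """Schedule a hypothetical /tempo/beat message at the Unix time when `beat` falls."""
    beat_time = start_time + beat * 60.0 / bpm
    return osc_bundle(osc_timetag(beat_time), osc_message("/tempo/beat", float(beat), bpm))
```

For instance, `beat_bundle(120.0, 8, start)` with `start = time.time()` would schedule beat 8 four seconds after `start`; sending bundles slightly ahead of time lets each machine play the beat together despite network jitter.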

Examples:

- At ICMC 2000 in Berlin (http://www.audiosynth.com/icmc2k), a network of about 12 Macintoshes running SuperCollider synthesized sound and changed each other’s parameters via OSC, inspired by David Tudor’s composition "Rainforest."

- The Meta-Orchestra project (Impett and Bongers, 2001) is a large local-area network that uses OSC.

- Simulus use OSC over WiFi to synchronise clock and tempo information between SuperCollider and AudioMulch in their live performances. See Bencina (2003) for a discussion of MIDI clock synchronisation techniques that have since been applied to OSC.

Application Area: WAN performance / "Telepresence"

A group of musicians in different physical locations play together as a sort of "musical conference call". Control messages and/or audio from each player go out to all the other sites. Sound is produced at each site to represent the activities of each participant.

Examples:

- The Hub's projects (Chris Brown, Mike Berry, Grainwave...)

- Quintet.net

- Randall Packer's projects

Application Area: Virtual Reality

Examples:

- CREATE's Distributed Sensing, Computation, and Presentation ("DSCP") systems. Inputs are multiple VR head sensors, hand trackers, etc., from multiple users in a shared virtual world. Dozens of computers interpret gestures, run simulations, and render audio and video. Everything communicates with CORBA and OSC (Pope and Engberg 2002).

- UCLA projects

Enabling Technology: Wrapping Other Protocols Inside OSC

People often convert data from other protocols into OSC for reasons including easier network transport, homogeneity of message formats, compatibility with existing OSC servers, and the possibility of self-documenting symbolic parameter names.

Examples:

- MIDI over OSC (e.g., for WAN performance). For example, Michael Zbyszynski's Remote MIDI patches for Max, C. Ramakrishnan's Occam (OSC->MIDI), G. Kling's Macco (MIDI->OSC, part of CSL).

- Converting "messy", "inconvenient" data from sensors to OSC format (e.g., for Sensor/Gesture-Based Electronic Musical Instruments)
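To make the MIDI-over-OSC idea concrete, the Python sketch below wraps one raw MIDI channel voice message in an OSC message with a self-documenting symbolic address. The `/midi/<channel>/<type>` address scheme is an assumption for illustration, not the scheme used by any of the tools named above. OSC 1.0 also lists a nonstandard `m` type tag for 4-byte MIDI messages; plain int32 arguments are used here instead, on the assumption that more receivers understand them.

```python
import struct

# Hypothetical address scheme: /midi/<channel 1-16>/<message name>
MIDI_NAMES = {
    0x80: "noteoff", 0x90: "noteon", 0xA0: "polytouch", 0xB0: "cc",
    0xC0: "program", 0xD0: "touch", 0xE0: "bend",
}


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message_ints(address: str, *ints: int) -> bytes:
    """Encode an OSC message whose arguments are all big-endian int32 (type tag 'i')."""
    type_tags = "," + "i" * len(ints)
    payload = b"".join(struct.pack(">i", n) for n in ints)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def midi_to_osc(midi: bytes) -> bytes:
    """Wrap one MIDI channel voice message in an OSC message with a symbolic address."""
    status = midi[0]
    kind = status & 0xF0
    if kind not in MIDI_NAMES:
        raise ValueError(f"not a channel voice status byte: 0x{status:02X}")
    channel = (status & 0x0F) + 1
    data = list(midi[1:])
    if kind == 0xE0:
        # Pitch bend: two 7-bit data bytes, LSB first, form one 14-bit value (8192 = center).
        data = [data[0] | (data[1] << 7)]
    return osc_message_ints(f"/midi/{channel}/{MIDI_NAMES[kind]}", *data)
```

For example, the note-on bytes `0x90 60 100` become a message addressed to `/midi/1/noteon` with the integer arguments 60 and 100, which a receiver can route by name rather than by parsing status bytes.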

References

Bencina, R. (2003), PortAudio and Media Synchronisation. In Proceedings of the Australasian Computer Music Conference, Australasian Computer Music Association, Perth, pp. 13-20.

Garnett, G.E., Jonnalagadda, M., Elezovic, I., Johnson, T. and Small, K., Technological Advances for Conducting a Virtual Ensemble, in International Computer Music Conference, (Habana, Cuba, 2001), 167-169.

Garnett, G.E., Choi, K., Johnson, T. and Subramanian, V., VirtualScore: Exploring Music in an Immersive Virtual Environment, in Symposium on Sensing and Input for Media-Centric Systems (SIMS), (Santa Barbara, CA, 2002), 19-23.

Goudeseune, C., Garnett, G. and Johnson, T., Resonant Processing of Instrumental Sound Controlled by Spatial Position, in CHI '01 Workshop on New Interfaces for Musical Expression (NIME'01), (Seattle, WA, 2001), ACM SIGCHI.

Hankins, T., Merrill, D. and Robert, J., Circular Optical Object Locator, Proc. Conference on New Interfaces for Musical Expression (NIME-02), (Dublin, Ireland, 2002), 163-164.

Impett, J. and Bongers, B., Hypermusic and the Sighting of Sound - A Nomadic Studio Report, Proc. International Computer Music Conference, (Habana, Cuba, 2001), ICMA, 459-462.

Jehan, T. and Schoner, B., An Audio-Driven Perceptually Meaningful Timbre Synthesizer, in Proc. International Computer Music Conference, (Habana, Cuba, 2001), 381-388.

Overholt, D., The MATRIX: A Novel Controller for Musical Expression, Proc. CHI '01 Workshop on New Interfaces for Musical Expression (NIME'01), (Seattle, WA, 2001).

Pope, S.T. and Engberg, A., Distributed Control and Computation in the HPDM and DSCP Projects, in Proc. Symposium on Sensing and Input for Media-Centric Systems (SIMS), (Santa Barbara, CA, 2002), 38-43.

Wessel, David, Matthew Wright, and Shafqat Ali Khan. Preparation for Improvised Performance in Collaboration with a Khyal Singer, in Proc. International Computer Music Conference (Ann Arbor, Michigan, 1998), ICMA, 497-503.

Wilson, Scott, Michael Gurevich, Bill Verplank, and Pascal Stang. Microcontrollers in Music HCI Instruction: Reflections on Our Switch to the Atmel AVR Platform, In Proc. of the Conference on New Interfaces for Musical Expression, (Montreal, 2003) 24-29.

Young, J.P., Using the Web for Live Interactive Music, Proc. International Computer Music Conference, (Habana, Cuba, 2001), 302-305.